Rediscovering Intelligence: Can AI Still Learn from Humans?

ReLearn studies the relationship between human and machine intelligence, exploring how cognitive and psychological insights can still guide the future of AI.

Overview

As AI capabilities surge, ReLearn asks whether machines can still learn from humans and how cognitive science can shape the next generation of systems.

We bridge computer vision, cognitive science, and psychology to study reasoning, social understanding, and hybrid learning that blends human insight with autonomous discovery.

Key Themes

- Human-inspired foundations of reasoning and Theory of Mind

- Learning with humans via feedback and interaction

- Beyond the human blueprint through self-supervision

Important Dates

Invited Speakers

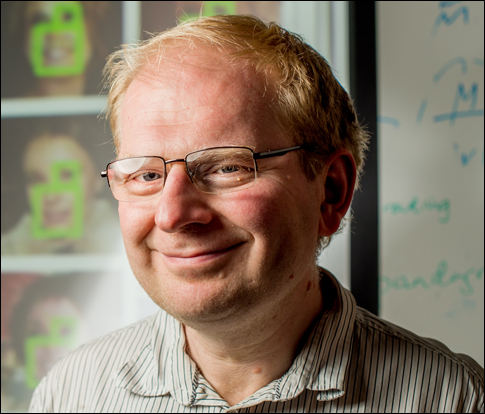

Alexei (Alyosha) Efros is a Professor in the Department of Electrical Engineering and Computer Sciences (EECS) at UC Berkeley. Before that, he was on the faculty of Carnegie Mellon University. His research is in computer vision and computer graphics, especially at the intersection of the two. He is particularly interested in using data-driven techniques to tackle problems where large quantities of unlabeled visual data are readily available. He is a recipient of the CVPR Best Paper Award (2006), the Sloan Fellowship (2008), the Guggenheim Fellowship (2008), the Okawa Grant (2008), the SIGGRAPH Significant New Researcher Award (2010), three PAMI Helmholtz Test-of-Time Prizes (1999, 2003, 2005), the ACM Prize in Computing (2016), the Diane McEntyre Award for Excellence in Teaching Computer Science (2019), the Jim and Donna Gray Award for Excellence in Undergraduate Teaching of Computer Science (2023), and the PAMI Thomas S. Huang Memorial Prize (2023).

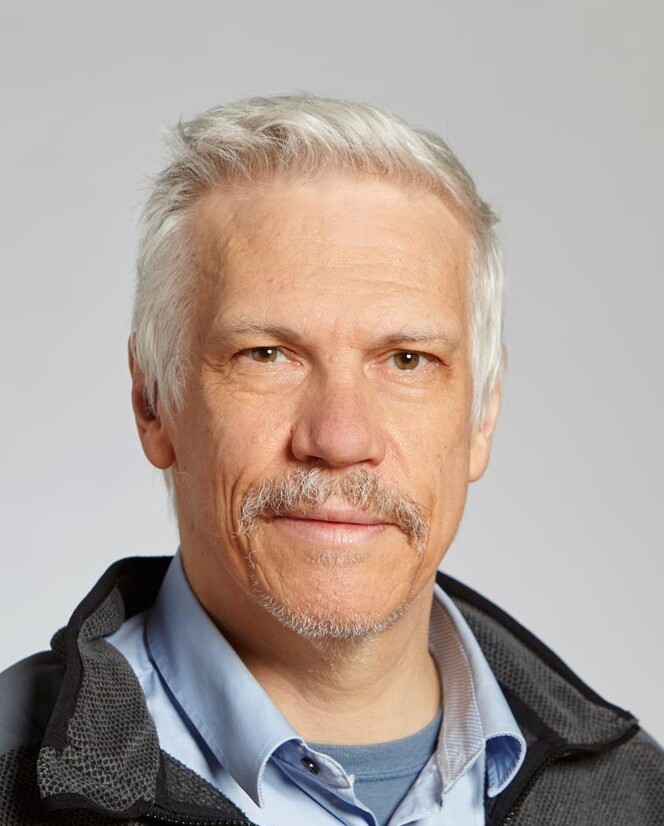

Dima Damen is a Professor of Computer Vision at the University of Bristol and a Senior Research Scientist at Google DeepMind. She is currently an EPSRC Fellow (2020-2026), focusing her research on the automatic understanding of object interactions, actions, and activities using wearable visual (and depth) sensors. She is best known for her leading work in egocentric vision, and has also contributed to novel research questions including mono-to-3D, video object segmentation, assessing action completion, domain adaptation, skill and expertise determination from video sequences, discovering task-relevant objects, dual-domain and dual-time learning, and multi-modal fusion using vision, audio, and language.

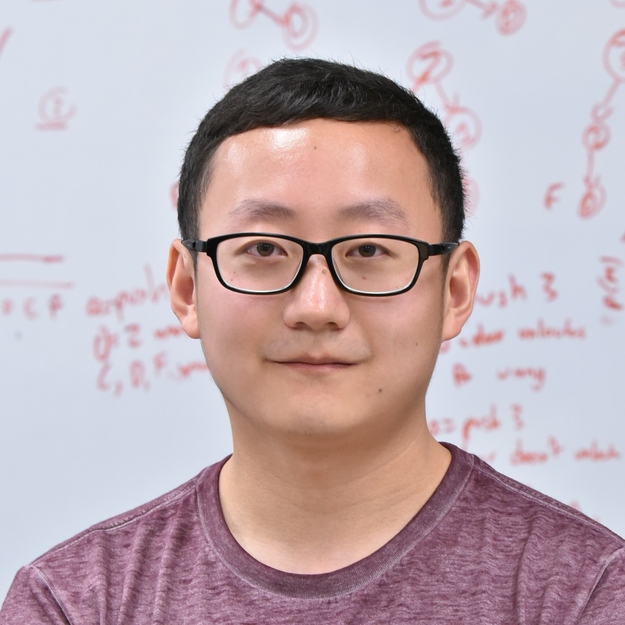

Saining Xie is an Assistant Professor of Computer Science at NYU Courant and part of the CILVR group. He is also affiliated with the NYU Center for Data Science. Before that, he was a research scientist at Facebook AI Research (FAIR), Menlo Park. He received his PhD and MS degrees from the CSE Department at UC San Diego, advised by Zhuowen Tu. During his PhD studies, he also interned at NEC Labs, Adobe, Facebook, Google, and DeepMind. Prior to that, he obtained his bachelor's degree from Shanghai Jiao Tong University. His primary research interests are computer vision and machine learning.

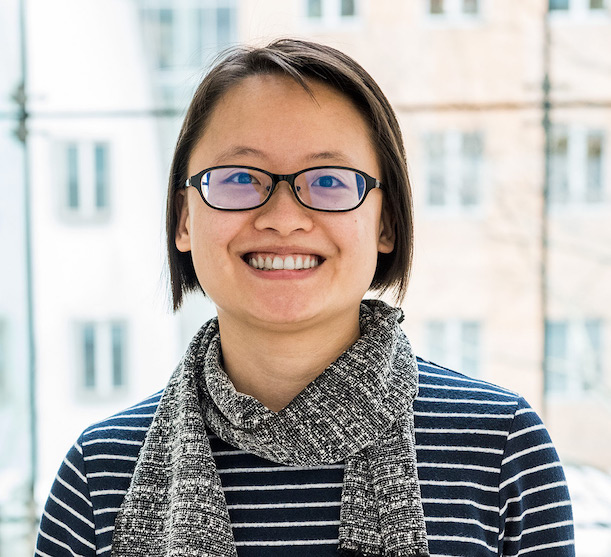

Manling Li is an Assistant Professor at Northwestern University. She was a postdoc at Stanford University and received her PhD in Computer Science from the University of Illinois Urbana-Champaign in 2023. She works at the intersection of language, vision, and robotics. Her work won the ACL 2024 Outstanding Paper Award, the ACL 2020 Best Demo Paper Award, and the NAACL 2021 Best Demo Paper Award. She was a recipient of the Microsoft Research PhD Fellowship in 2021, was named an EECS Rising Star in 2022, and was a DARPA Riser in 2022. She has served on the organizing committees of ACL 2025, NAACL 2025, and EMNLP 2024, and has delivered tutorials on multimodal knowledge at IJCAI 2024, CVPR 2023, NAACL 2022, AAAI 2021, and ACL 2021.

Schedule

Call for Papers

We invite papers aligned with the workshop themes, spanning human-inspired foundations, learning with humans, and hybrid intelligence.

Submissions will follow CVPR 2026 formatting and length guidelines. Accepted papers will be presented in oral/spotlight and poster formats.

Suggested Topics

- Human-inspired architectures, reasoning, and abstraction

- Theory of Mind and social understanding in AI

- Human-in-the-loop learning, feedback, and demonstrations

- Egocentric, multimodal, and embodied interaction

- Synthetic data, simulation, and self-supervised learning

- Grounded language and cognitive evaluation

- Hybrid intelligence, trust, alignment, and safety

- Ethical and philosophical perspectives on learning

Challenge

Multimodal Theory of Mind (ToM) Challenge: infer goals and beliefs from videos, textual scene descriptions, and dialogues.

- Reasoning from a single agent's behavior

- Reasoning from multi-agent interactions

Opens Jan 2026 · Submissions due May 2026.

Organizers

For inquiries regarding organization, please contact:

- Dr. Xi Wang · xi.wang@inf.ethz.ch

- Dr. Yen-Ling Kuo · ylkuo@virginia.edu